Troubleshooting Stable Diffusion: Fixing AUTOMATIC1111 Web UI Errors, Plus Performance Tips

Installing and running Stable Diffusion can be frustrating, and unexpected errors are common. It’s natural to wonder why things can’t just work on the first try. To save you time, I’ve collected the fixes that worked for me. They come from reading forums and from a fair amount of trial and error with AUTOMATIC1111’s web UI.

If you’re having trouble installing Stable Diffusion on your Windows computer, you can refer to my step-by-step guide. Now, let’s dive into the errors and their solutions:

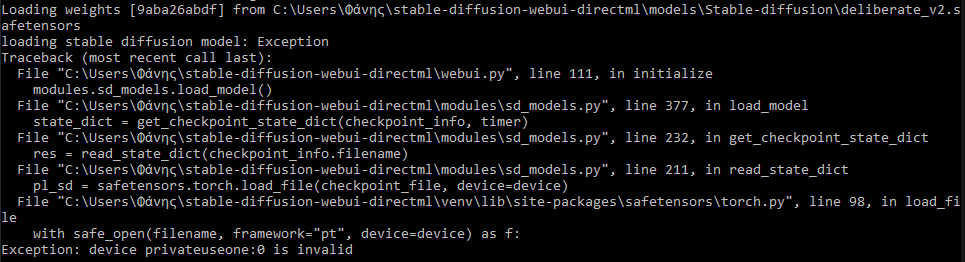

- Can’t load safetensors: To load models with a “.safetensors” extension, add the line “set SAFETENSORS_FAST_GPU=1” to the “webui-user.bat” file. Note that safetensors cannot be used together with the --lowram option; combining them causes an error.
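As a rough sketch, the edited “webui-user.bat” might look like the following. The surrounding lines are what the stock file shipped with the web UI normally contains, which is an assumption about your copy; only the SAFETENSORS_FAST_GPU line comes from the tip above.

```bat
@echo off

set PYTHON=
set GIT=
set VENV_DIR=

rem Do not put --lowram on this line when loading .safetensors models.
set COMMANDLINE_ARGS=

rem Line added for the safetensors tip.
set SAFETENSORS_FAST_GPU=1

call webui.bat
```

All later examples only change the COMMANDLINE_ARGS line of this file.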

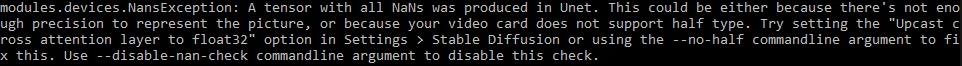

- NansException: If you encounter this error, add --disable-nan-check to your other command-line arguments. The error can occur when using --opt-sub-quad-attention.

- Black image: After adding --disable-nan-check, you may start getting black images. To address this, try --xformers if you have an NVIDIA GPU. To use this option, install “xformers” by opening a terminal and typing “pip install xformers”.

More generally, to fix black images, add --no-half to the command-line arguments, typically together with --precision full or --precision autocast. Using --no-half with --precision full forces Stable Diffusion to perform all calculations in 32-bit floating point (fp32) instead of 16-bit floating point (fp16). The other setting, --precision autocast, uses fp16 where possible. Full precision may yield better results, but it can take more time. The default is to use fp16 for faster processing, accepting slightly less precise results.
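The two combinations described above could be written in “webui-user.bat” roughly as follows. This is a sketch rather than a recommended configuration; note that the precision mode is passed as a separate value after --precision. Which option works better depends on your GPU, so treat the choice as something to test.

```bat
rem Inside webui-user.bat. Keep only ONE COMMANDLINE_ARGS line active.

rem Option A: all calculations in fp32 (may be slower).
set COMMANDLINE_ARGS=--no-half --precision full

rem Option B: let autocast use fp16 where possible.
rem set COMMANDLINE_ARGS=--no-half --precision autocast
```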

- Not enough memory: If your GPU has little VRAM (video random access memory), you might encounter this error. To address it, add --lowvram to the command-line arguments if you have 4-6GB of VRAM, or --medvram if you have 8GB of VRAM. These options reduce memory use, which makes the error less likely, at the cost of slower generation. If the error persists, you may need to remove other options or add --no-half if it is not already present.
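For illustration, the memory options above map onto “webui-user.bat” like this. The VRAM ranges in the comments are the ones given in this article, not exact limits.

```bat
rem Inside webui-user.bat. Keep only ONE COMMANDLINE_ARGS line active.

rem 4-6GB of VRAM:
set COMMANDLINE_ARGS=--lowvram

rem 8GB of VRAM:
rem set COMMANDLINE_ARGS=--medvram
```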

Bonus:

Performance tips: For NVIDIA GPU users, installing xformers and running with --xformers is recommended for better performance. For the fastest image generation, start with no arguments (or with just --xformers if you have an NVIDIA GPU) and add more arguments only as you run into errors.

You can also experiment with --opt-sub-quad-attention, --opt-split-attention, or --opt-split-attention-v1, which can improve performance. --opt-sub-quad-attention is particularly useful with the DirectML backend (AMD GPUs). Be aware, however, that adding --disable-nan-check may lead to black images. If that happens, include --no-half and --precision autocast to resolve the issue.
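The "start small, add flags as errors appear" approach might look like this in “webui-user.bat”. The step order is only one possible progression, assembled from the tips in this article; enable one line at a time.

```bat
rem Inside webui-user.bat. Keep only ONE COMMANDLINE_ARGS line active.

rem Step 1 (NVIDIA): start with xformers only.
set COMMANDLINE_ARGS=--xformers

rem Step 1 (AMD / DirectML): try sub-quadratic attention instead.
rem set COMMANDLINE_ARGS=--opt-sub-quad-attention

rem Step 2: if NansException appears, skip the NaN check.
rem set COMMANDLINE_ARGS=--opt-sub-quad-attention --disable-nan-check

rem Step 3: if images then come out black, add the precision flags.
rem set COMMANDLINE_ARGS=--opt-sub-quad-attention --disable-nan-check --no-half --precision autocast
```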

Additionally, you can load a specific model’s weights directly by adding the argument --ckpt models/Stable-diffusion/<model>. Both “.ckpt” and “.safetensors” files are supported.
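As a small example, the line below loads one model at startup. The file name “my-model.safetensors” is hypothetical; replace it with the actual file in your models folder. Do not leave the angle brackets from the placeholder in a batch file, since the Windows command interpreter treats them as redirection.

```bat
rem Inside webui-user.bat.
rem "my-model.safetensors" is a made-up name used only for illustration.
set COMMANDLINE_ARGS=--ckpt models/Stable-diffusion/my-model.safetensors
```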

In summary, I spent a good deal of time finding and testing these solutions across various sources. They resolved the errors I encountered, and I hope they prove helpful to you as well.
